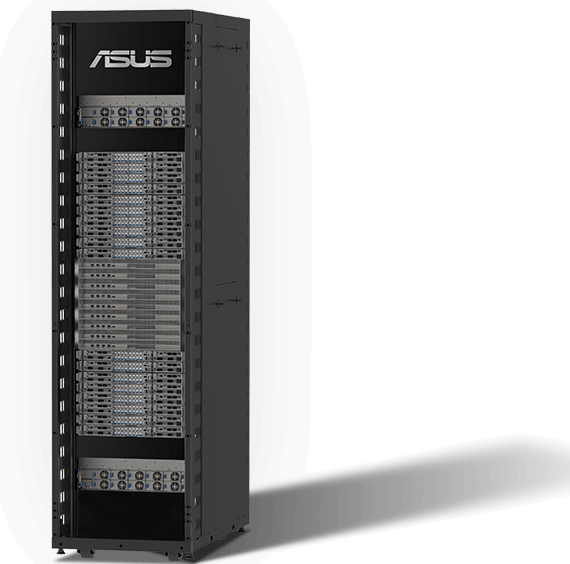

Artificial intelligence, or AI, is having a profound impact, reshaping our world at an unprecedented rate. To stay competitive, proactive management is essential, and ASUS AI infrastructure solutions are pivotal in navigating this evolving landscape. ASUS offers a comprehensive range of AI solutions, from AI servers and integrated racks to the ESC AI POD for large-scale computing, along with advanced software platforms. All of them can be customized to handle every workload and help you stay ahead in the AI race.

ASUS excels in its holistic approach, harmonizing cutting-edge hardware and software, empowering customers to accelerate their research and innovation. By bridging technological excellence with practical solutions, ASUS pioneers advancements that redefine possibilities in AI-driven industries and everyday experiences.

Do you see AI as a potential solution for your current challenges? Looking to develop enterprise AI but worried about the costs and maintenance? ASUS provides comprehensive AI infrastructure solutions for diverse workloads and all your specific needs. These advanced AI servers and software solutions are purpose-built for intricate tasks like deep learning, machine learning, predictive AI, generative AI (GenAI), large language models (LLMs), AI training and inference, and AI supercomputing. Choose ASUS to efficiently process vast datasets and execute complex computations.

AI supercomputers are built from finely tuned hardware with countless processors, specialized networks and vast storage. ASUS offers turnkey solutions, expertly handling every aspect of supercomputer construction, from data center setup and cabinet installation to thorough testing and onboarding. Rigorous testing ensures top-notch performance.

Unimaginable AI. Unleashed.

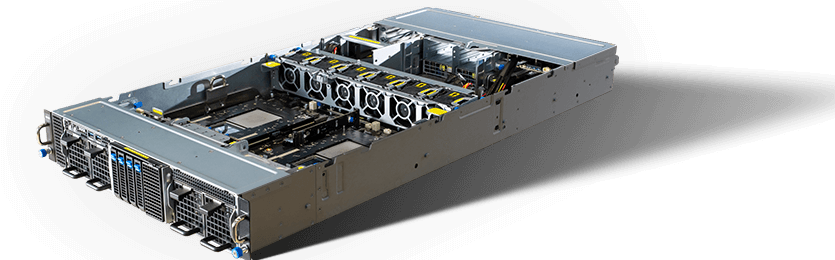

ASUS AI servers with eight GPUs excel in intensive AI model training, managing large datasets, and complex computations. These servers are specifically designed for AI, machine learning, and high-performance computing (HPC), ensuring top-notch performance and reliability.

Dedicated deep-learning training and inference

Empowering AI and HPC with excellent performance

Whether you're involved in AI research, data analysis, or deploying AI applications, ASUS AI servers, recognized for their outstanding performance and scalability in complex neural-network training, significantly accelerate training and fully unleash the capabilities of AI applications.

Turbocharge generative AI and LLM workloads

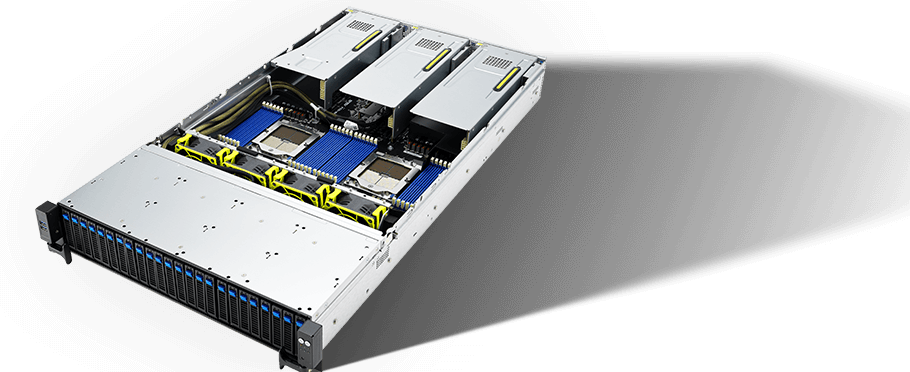

Many engineers and developers strive to enhance performance and customization by fine-tuning large language models (LLMs). However, they often encounter challenges like deployment failures. To overcome these issues, robust AI server solutions are essential for ensuring seamless and efficient model deployment and operation.

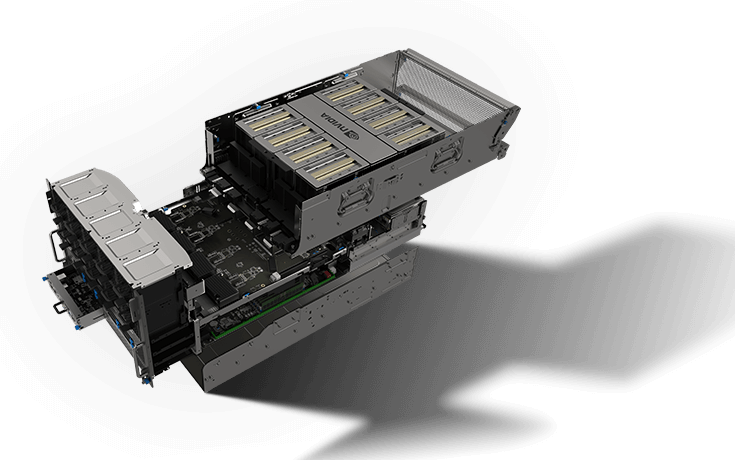

2U high-performance server powered by the NVIDIA Grace Hopper Superchip with NVIDIA NVLink-C2C technology

2U NVIDIA MGX GB200 NVL2 server designed for generative AI and HPC

Meticulously trained machine-learning models face the ultimate challenge of interpreting new, unseen data, a task that involves managing large-scale data and overcoming hardware limitations. ASUS AI servers, with their powerful data-transfer capabilities, efficiently run live data through trained models to make accurate predictions.

Performance, efficiency and manageability with multi-tasking

Expansion flexibility and scalability to cover the full range of applications

As manufacturing advances into the Industry 4.0 era, sophisticated control systems must incorporate edge AI to enhance processes holistically. ASUS edge AI server solutions provide real-time processing at the device level, improving efficiency, reducing latency and enhancing security for IoT applications.

Faster storage, graphics and networking capabilities

** Two internal SATA bays only for 650W/short PSU mode

Elevated efficiency, optimized GPU and network capabilities

** Non-GPU SKUs are designed for 0–55°C environments, and GPU-equipped SKUs for 0–35°C.

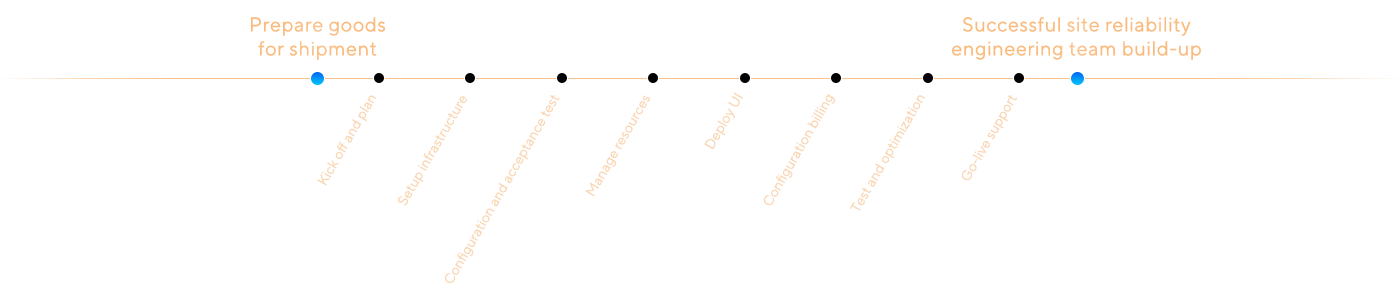

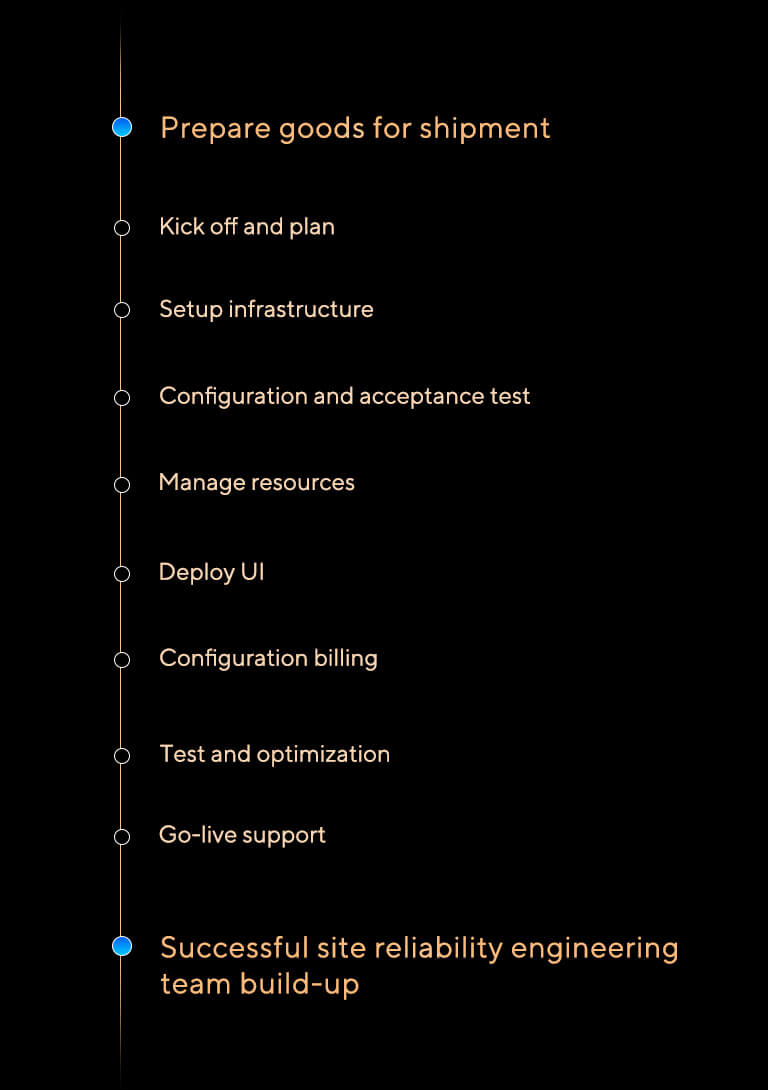

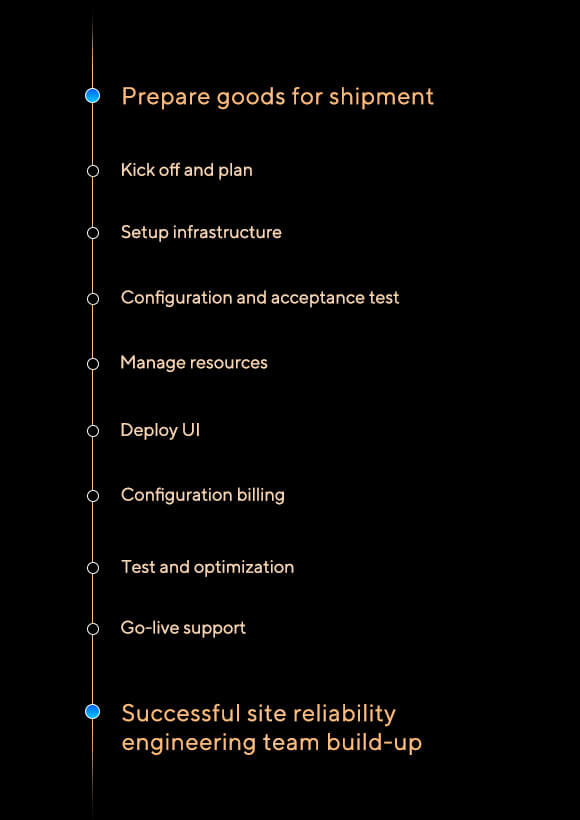

Invested in an AI server but don't know how to streamline its management and optimize performance with user-friendly AI software? ASUS and TWSC integrate advanced AI software tools and AI Foundry Services that facilitate the development, deployment, and management of AI applications. In as little as eight weeks*, the ASUS team can deliver standard data-center software solutions, including cluster deployment, billing systems and generative AI tools, as well as the latest OS verification, security updates, service patches and more.

Furthermore, ASUS provides crucial verification and acceptance services, including all aspects of the software stack, to ensure servers operate flawlessly in real-world environments. This stage validates compliance with all specified requirements and ensures seamless communication within each client's specific IT setup. The process begins with rigorous checks on power, network, GPU cards, voltage and temperature to ensure smooth startup and functionality. Thorough testing identifies and resolves issues before handover, guaranteeing that data centers operate reliably under full load.

The meticulous approach followed by ASUS AI infrastructure solutions provides scalability that can adapt seamlessly to your growing AI needs, ensuring flexibility and future-proofing your infrastructure.

*Please note that the delivery time for software solutions may vary based on customized requirements and project scope of work.

Choose an ASUS AI server solution to enjoy significant time savings, reducing deployment time by up to 50% compared to manual setups while allowing for seamless integration and enhanced performance. The expertise of specialist ASUS teams minimizes errors and downtime by preventing costly misconfigurations, ensuring that your servers run smoothly and reliably. With an intuitive interface and powerful automation features, ASUS simplifies server management, making it effortless to handle complex tasks.

Outshining its competitors, ASUS offers advanced computing and AI software services, including high-performance computing services, GPU virtualization management, integration with external systems for managing models, deployment of private cloud services, generative AI training, and integrated software and hardware solutions for data centers.

*The above service availability may vary by country or region.

The future of artificial intelligence is swiftly reshaping business applications and consumer lifestyles. ASUS expertise lies in striking the perfect balance between hardware and software, empowering customers to expedite their research and innovation endeavors.

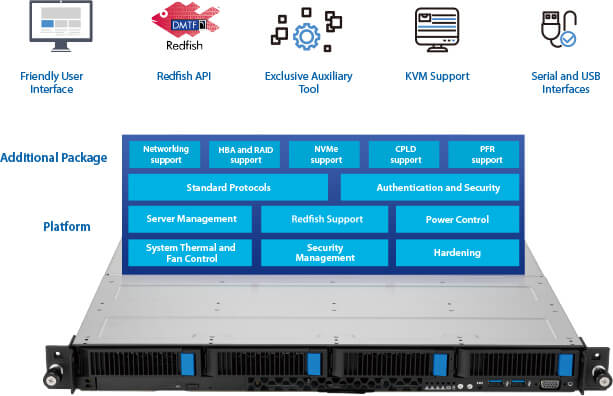

ASUS ASMB11-iKVM is an optimized firmware-management tool for server and data-center operations, equipped with the IPMI and Redfish protocols to access and monitor all hardware status, sensors and updates. Out-of-band management lets IT teams handle operations and deployments remotely, significantly reducing redundant work. Specifically, ASMB11-iKVM connects the BIOS, BMC, server information and key components, offering multiple access routes to serve almost any need and making it quick and easy to improve IT operational efficiency.
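Because Redfish is an open DMTF standard served over HTTPS, a Redfish-capable BMC can be queried with ordinary HTTP tooling. The Python below is a minimal sketch, assuming the BMC exposes the standard `Chassis/{id}/Thermal` resource (newer firmware may publish `ThermalSubsystem` instead). It reads the temperature sensors and flags any reading at or above its critical threshold. The BMC address, the chassis ID `1`, the credentials and the helper names are hypothetical placeholders. The actual resource paths on ASMB11-iKVM firmware may differ.

```python
import base64
import json
import ssl
from urllib.request import Request, urlopen


def fetch_redfish(base_url, path, user, password, verify_tls=True):
    """GET a Redfish resource with HTTP Basic auth and return the parsed JSON."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = Request(base_url.rstrip("/") + path,
                  headers={"Authorization": f"Basic {token}",
                           "Accept": "application/json"})
    ctx = ssl.create_default_context()
    if not verify_tls:
        # BMCs often ship self-signed certificates; disable checks only on trusted networks.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urlopen(req, context=ctx, timeout=10) as resp:
        return json.load(resp)


def summarize_temperatures(thermal):
    """Return (name, reading, critical, over_limit) for each sensor that reports a value."""
    results = []
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        if reading is None:
            continue  # sensor absent or not currently reporting
        critical = sensor.get("UpperThresholdCritical")
        over = critical is not None and reading >= critical
        results.append((sensor.get("Name", "unknown"), reading, critical, over))
    return results


if __name__ == "__main__":
    # Hypothetical BMC address and credentials; uncomment and adjust for a real system.
    # thermal = fetch_redfish("https://192.0.2.10", "/redfish/v1/Chassis/1/Thermal",
    #                         "admin", "password", verify_tls=False)
    # Sample payload shaped like a Redfish Thermal response, so the parsing runs offline.
    thermal = {"Temperatures": [
        {"Name": "CPU1 Temp", "ReadingCelsius": 62, "UpperThresholdCritical": 95},
        {"Name": "GPU1 Temp", "ReadingCelsius": 97, "UpperThresholdCritical": 95},
    ]}
    for name, reading, critical, over in summarize_temperatures(thermal):
        status = "CRITICAL" if over else "ok"
        print(f"{name}: {reading} C (limit {critical}) {status}")
```

The sketch keeps network access and parsing separate, so the threshold logic can be checked against a saved response without a live BMC. It also sticks to the standard library so that it runs without extra dependencies.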

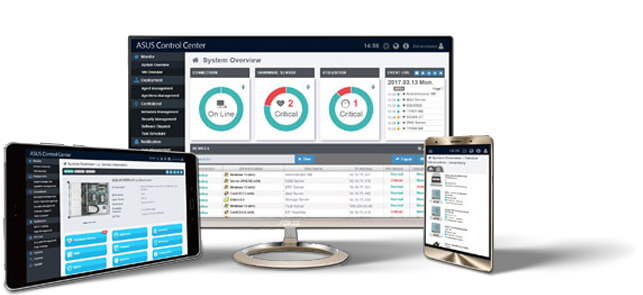

ASUS Control Center (ACC) is an enterprise-grade centralized management tool for servers and client devices. It is tailored for efficient IT management, including both hardware- and software-inventory management, and the remote dispatch of both software and firmware updates. It also allows for simple remote device configuration and health checks, plus rapid deployment of the latest security policies and patches. In short, ACC is a one-stop portal for IT management, and has been embraced by industries and businesses globally to minimize administration and maximize uptime.

In the rapidly evolving landscape of artificial intelligence, businesses need robust solutions that can be deployed swiftly and operate reliably under service level agreements (SLAs) that are fit for enterprise. The AFS POD solution, designed specifically for cloud service providers in the arena of generative AI, meets these needs by combining state-of-the-art technology with comprehensive service guarantees.

The system’s intuitive web portal allows both developers and IT administrators to access various generative AI services and resources easily. This user-friendly interface supports rapid workload deployment and real-time operational monitoring, ensuring a hassle-free rollout.

Four ASUS SLA assurances

The generative AI cloud service bridges the gap between hardware and software, streamlining resource management and offering a spectrum of tools and billing options. Our service takes cloud computing to the next level by introducing highly customizable features and processes tailored to meet the diverse needs of different operators.

Unleash the full potential of NVIDIA Omniverse™ with ASUS AI server solutions. Designed to accelerate 3D design collaboration and simulation, ASUS servers, powered by NVIDIA AI Accelerator solutions, offer seamless integration with Omniverse, delivering unparalleled performance, reliability, and scalability from on-premises to hybrid cloud solutions.

AI servers from ASUS serve as ideal platforms for AI services, ensuring seamless compatibility and operation for any AI applications clients wish to deploy. ASUS provides assistance with service scheduling and resource monitoring, ensuring smooth operations on top of ASUS hardware.

ASUS understands the critical role of hardware in unlocking the full potential of AI. Our platform seamlessly integrates fine-tuning and inference capabilities directly with computing servers, network topologies and high-speed storage solutions, optimizing system performance and accelerating your AI initiatives.

We partner with and integrate solutions such as WEKA, AIHPC, Asia-Pacific Intelligence, TWSC and more. These solutions also integrate third-party AI applications for comprehensive functionality. By leveraging our platform, you can experience faster inference, reduced latency and lower resource consumption without sacrificing accuracy. Deploy cutting-edge AI models quickly and efficiently, bringing your innovative ideas to life and delivering state-of-the-art solutions to market ahead of the competition.